Exploring A Model Type Detection Attack against Machine Learning as A Service

DOI:

https://doi.org/10.64509/jicn.12.27

Keywords:

Machine learning security, deep learning models, machine learning as a service, model type detection, linear models

Abstract

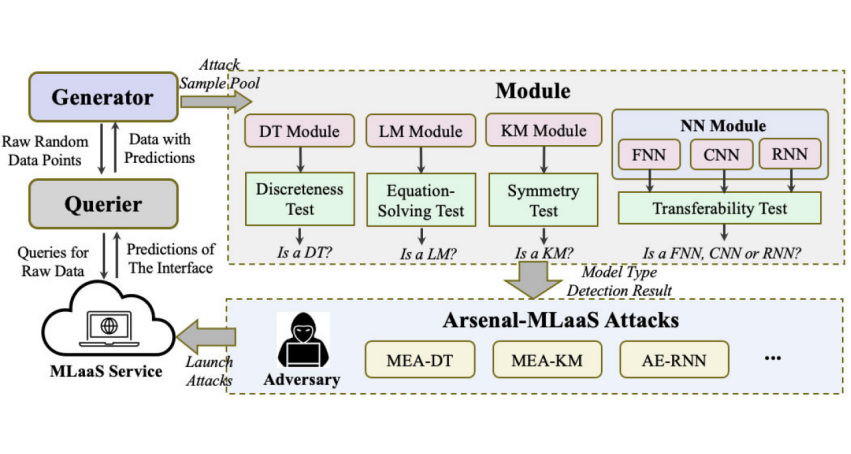

Recently, Machine-Learning-as-a-Service (MLaaS) systems have been reported to be vulnerable to various novel attacks, e.g., model extraction attacks and adversarial examples. However, in our investigation, we notice that the majority of MLaaS attacks are not as threatening as expected because of the model-type-sensitive problem: many MLaaS attacks are designed for only one specific type of model. Without model type information as default prior knowledge, these attacks suffer great performance degradation or even become infeasible. In this paper, we present a novel attack method, named SNOOPER, to resolve the model-type-sensitive problem of MLaaS attacks. Specifically, SNOOPER integrates multiple self-designed model-type-detection modules. Each module judges whether a given black-box model belongs to a specific type of model by analyzing its query-response pattern. After running all modules, the attacker knows the type of the target model during the querying stage and can choose the optimal attack method accordingly. To save budget, the queries can also be reused in the later attack stage. We call this kind of attack a model-type-detection attack. Finally, we evaluate SNOOPER on several popular model classes, including decision trees, linear models, non-linear models, and neural networks. The results show that SNOOPER detects the model type with more than 90% accuracy.
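To make the idea of a detection module concrete, the following is a minimal illustrative sketch of how a query-response pattern could separate one model type from others. It is not one of SNOOPER's modules, which the abstract does not describe. It assumes a label-only binary black box and relies on a simple geometric fact: a linear classifier can change its predicted label at most once along any straight segment in input space. The names `count_label_flips`, `looks_linear`, `linear_model`, and `ring_model` are hypothetical and exist only for this example.

```python
import random


def count_label_flips(query, a, b, steps=50):
    """Query the black box along the segment a -> b and count label changes."""
    labels = []
    for i in range(steps + 1):
        t = i / steps
        point = [x + t * (y - x) for x, y in zip(a, b)]
        labels.append(query(point))
    return sum(1 for prev, cur in zip(labels, labels[1:]) if prev != cur)


def looks_linear(query, dim, n_segments=200, steps=50, seed=0):
    """Hypothetical detection module (an assumption, not the paper's method).

    A binary linear classifier flips its label at most once along any
    straight segment, so a segment with two or more flips rules it out.
    """
    rng = random.Random(seed)
    for _ in range(n_segments):
        a = [rng.uniform(-1, 1) for _ in range(dim)]
        b = [rng.uniform(-1, 1) for _ in range(dim)]
        if count_label_flips(query, a, b, steps) > 1:
            return False
    return True


# Toy stand-ins for a remote MLaaS prediction API (label-only output).
def linear_model(x):
    return int(0.7 * x[0] - 0.2 * x[1] + 0.1 > 0)


def ring_model(x):
    # Non-linear decision boundary: a disk of radius 0.5 around the origin.
    return int(x[0] ** 2 + x[1] ** 2 < 0.25)


is_linear = looks_linear(linear_model, dim=2)
is_ring_linear = looks_linear(ring_model, dim=2)
print(is_linear, is_ring_linear)
```

A test like this can only reject the linear hypothesis with certainty. Passing it is evidence, not proof, that the target is linear, so a practical detector would presumably combine several such checks. The queried points and labels could also be stored and reused in the later attack stage, in line with the budget-saving idea described in the abstract.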




License

Copyright (c) 2025 Authors

This work is licensed under a Creative Commons Attribution 4.0 International License.